A Practical Approach to Enterprise AI Success: Moving from Hype to Results

Bobby Hyam is currently a Solutions Engineer at Glean. All opinions are his own.

Enterprise AI adoption is at a critical inflection point. Whilst 78% of organisations are now using AI in at least one business function, more than 80% aren't seeing tangible impact on their bottom line. Meanwhile, 74% of companies struggle to achieve and scale value from their AI initiatives.

The challenge isn't a lack of AI tools or capabilities - it's the gap between promise and execution. After working with countless organisations navigating their AI journeys, I've seen the patterns that separate success from failure. The companies that achieve real results don't just adopt AI; they approach it strategically.

This article outlines a practical framework for enterprise AI success, drawing from proven change management principles and real-world implementation experience. Rather than adding to the hype, let's focus on what actually works.

The Vendor Landscape Challenge

We're living through the biggest venture capital gold rush in tech history, with an absurd amount of money pumped into AI startups. This has created thousands of new businesses all promising to revolutionise your company - and most of them have hired salespeople or built automated AI tools that are cold-calling you right now for your attention.

You might think you're safer sticking with the big, established players you've worked with for years. But here's the uncomfortable truth: none of them have built best-in-class AI products yet. Microsoft Copilot, Google's Gemini, Amazon's Q for Business - the adoption feedback hasn't exactly been mind-blowing.

While nearly 70% of Fortune 500 companies have integrated Microsoft 365 Copilot into their workflows, recent research shows mixed results. A comprehensive study of M365 Copilot users found that for "opportunities for innovation and creativity," 60% of participants remained neutral, suggesting Copilot hasn't made meaningful contributions in these areas.

These giants are struggling to reorient their massive organisations around AI opportunities, and they're nowhere near as nimble as the emerging players.

The answer isn't choosing between startups and established players - it's having a structured approach to navigate both options strategically. But first, let's understand why most organisations struggle with this decision.

Why Most AI Vision-Building Approaches Create Their Own Problems

I've seen three common approaches to building an AI vision, and each one creates its own headaches:

The Top-Down Approach

A leader takes charge and forms a small group for market research and experimental pilots. Keeping the circle small can drive action faster, but unless that leader truly understands the work happening at the individual contributor level, they'll solve the wrong problems.

The Committee Approach

Organisations form large, cross-functional AI committees involving multiple departments. This brings diverse ideas and ensures you turn over more stones looking for gems. But unless it's optimised for speed, you get into evaluation cycles that never end. I see many organisations that evaluate tool after tool, never pulling the trigger because they want the perfect solution that delivers on every idea they have.

The challenge is significant: whilst 90% of employees are using generative AI for work, only 13% consider their organisation to be an early adopter, highlighting the gap between individual enthusiasm and organisational transformation. Even more concerning, only 22% of employees report receiving adequate AI support, which drives them to find their own solutions.

The Delegation Approach

Leaders take a hands-off approach, delegating vision-building to a technical person or solutions architect. Depending on who's leading, they might build quickly without enough planning or struggle to create an achievable vision at all.

Most companies I work with have tried at least one of these approaches and got stuck. Each has merit, but they all miss crucial elements needed for sustainable AI transformation.

A Strategic Framework That Works

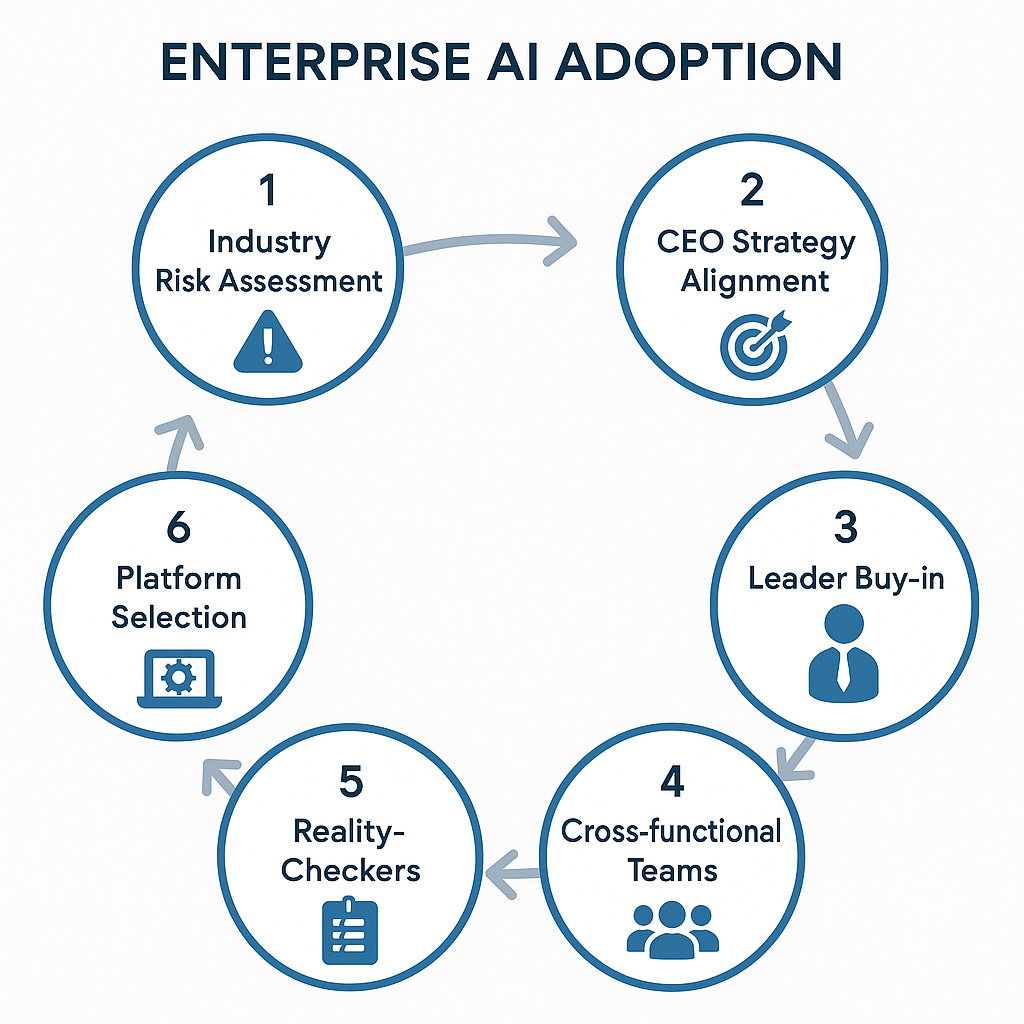

Here's what I've observed works better, based on what successful companies actually do. This approach draws from Professor John Kotter's decades of change management research, adapted specifically for AI transformation challenges.

Although I reference the Kotter model, I'm not suggesting you implement this entire framework rigidly. You should aim to get these steps done quickly with a bias for action, not worry about doing everything by the book. The key is ensuring you address each element, even if briefly.

Step 1: Start With Industry Risk Assessment (Create Urgency)

The first question isn't "What can AI do for us?" It's "What's the competitive urgency in our industry?"

This aligns with Kotter's first principle: create a sense of urgency by identifying the crisis or opportunity that demands immediate action. In AI transformation, the urgency comes from competitive risk assessment.

If AI presents huge and immediate danger or opportunity in your sector, you need to take risks and experiment with emerging technologies. If you're in a slow-moving, highly regulated industry or have little competition (lucky you!), you can afford to stick with existing partners as their products improve.

For everyone in the middle - which is most of you - it becomes a game of tipping the odds in your favour.

Step 2: Focus on Functional Areas Aligned With CEO Strategy (Build Vision)

Once you've established your risk tolerance, look at specific functional areas of your business. Which function is most important to your current CEO's strategy?

If growth is the priority, maybe it's sales. If cost-cutting and consolidation matter most, perhaps focus on areas with expensive people doing repetitive work that could be automated.

I can't emphasise this enough: start with top-level business objectives and work down to functional areas. AI can positively impact any area, so why not target the ones you care most about? This follows Kotter's principle of forming a strategic vision - but anchored in existing business priorities rather than abstract AI possibilities.

Step 3: Get Functional Leaders Excited (Build Coalition)

Once you've selected your functional area, the leader needs to be genuinely excited about what AI can do for them. If this is where your biggest AI opportunity lies and they're not enthusiastic, you might have bigger organisational problems to solve first.

This reflects Kotter's emphasis on building a guiding coalition with committed leaders who have the power and credibility to drive change. In AI transformation, functional leadership buy-in is absolutely critical.

Step 4: Build Cross-Functional Analysis Teams (Enlist Volunteer Army)

Involve enthusiastic volunteers from all levels of your chosen business units, plus cross-functional representatives. I'm always an advocate for making these programmes cross-functional even when they seem department-specific - you never know what insights an outside perspective might uncover.

Here's where Kotter's research becomes particularly relevant: his concept of enlisting a volunteer army emphasises that volunteers - people who actively want to contribute - are far more effective than assignees who are told to participate. The data supports this: volunteer-driven change initiatives have significantly higher success rates because participants are intrinsically motivated.

Analyse the real problems: What's slowing you down from reaching objectives? Where could you accelerate to make the boat go faster? Where are people investing time in manual, repetitive work? Is there valuable data somewhere that's too time-consuming to process manually?

Step 5: Bring in Reality-Checkers Early

Now you need people familiar with realistic AI capabilities today. Involve smart, enthusiastic technologists from your organisation and bring in external vendors to discuss your specific challenges.

Here's a mistake I see constantly: trying to finalise your use case list before involving third-party solution providers. You probably don't have all the knowledge internally to determine what's feasible. The data backs this up - 76% of organisations report a severe lack of AI professionals within their organisations, making external expertise crucial for successful AI adoption.

However, one thing you should do before engaging vendors or other experts is to draft a list of potential use cases - even if it's a bit vague and unclear. This makes their jobs much easier so they can quickly see where they might help and where they can't. For each use case, try to collect these data points:

Business owner: Who actually runs this process day-to-day?

Executive sponsor: The person who owns the budget that would pay for this solution

Data sources: What systems and data would need to be integrated?

Current pain points: What are the negative consequences of the current situation?

Expected savings: What money or time savings could an AI solution bring?

It's very difficult to do, but try to get those doing this investigative work to identify metrics that executive sponsors and budget owners actually care about. Executives - you can help by making this clear upfront! If your team can explain how the solution will help in terms of these specific metrics, it'll be much easier to get the green light later.
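To make the data points listed above concrete, here's a minimal sketch of how a draft use-case record could be captured. The class, field names and example values are my own illustration rather than a standard template; a spreadsheet with the same columns would do the job just as well.

```python
from dataclasses import dataclass, field


@dataclass
class UseCase:
    """One row in a draft use-case list (field names are illustrative)."""
    name: str
    business_owner: str = ""       # who runs the process day-to-day
    executive_sponsor: str = ""    # who owns the budget
    data_sources: list[str] = field(default_factory=list)
    pain_points: str = ""          # consequences of the current situation
    expected_savings: str = ""     # free text is fine at draft stage
    sponsor_metric: str = ""       # the metric the budget owner cares about

    def missing_fields(self) -> list[str]:
        """Return the names of the data points that are still blank."""
        return [key for key, value in vars(self).items() if not value]


# Hypothetical example: a vague first draft is still useful to a vendor.
draft = UseCase(
    name="Summarise inbound support tickets",
    business_owner="Support team lead",
    data_sources=["ticketing system"],
    pain_points="Agents re-read long threads before replying",
)
print(draft.missing_fields())
```

The point of `missing_fields` is simply to show at a glance which questions still need an answer before the use case goes in front of a budget owner.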

But here's the challenge with taking a very structured approach to this: AI is moving incredibly fast. Take Glean, for example. Six months ago, we were an enterprise search product. Now we're an agentic AI automation platform. I'm sure in another 6-12 months the product will look very different again.

It's not just the software - the improvements in underlying AI models make more and more ideas possible with every release, and it's honestly hard to keep up. What seemed impossible six months ago is now table stakes, and what's cutting-edge today might be commodity tomorrow.

This means your planning needs to balance structure with agility. You could hire consulting firms for capability analysis, but hint: you can also do much of this research yourselves with AI. Just make sure you get the right prompts in place so the AI doesn't just tell you all your ideas are fabulous!
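As a rough illustration of what "the right prompts" might look like, here's a small sketch that builds a deliberately sceptical review prompt. The function name and the wording are assumptions of mine, not a tested recipe, and the sketch only builds the text; you'd paste or send it to whichever AI tool you already use.

```python
def build_critique_prompt(use_case: str, context: str) -> str:
    """Return a prompt that asks for objections before any verdict."""
    return (
        "You are a sceptical reviewer of enterprise AI proposals.\n"
        f"Proposal: {use_case}\n"
        f"Context: {context}\n"
        "1. List the three strongest reasons this could fail.\n"
        "2. State what evidence would show it is feasible today.\n"
        "3. Only then give a verdict: pursue, park, or drop.\n"
        "Do not compliment the idea. If information is missing, say so."
    )


# Hypothetical proposal, used purely as an example.
prompt = build_critique_prompt(
    use_case="Auto-draft replies to customer emails",
    context="Mid-sized retailer, replies currently written by hand",
)
print(prompt)
```

Asking for failure modes first, and the verdict last, is one simple way to push back against an assistant's tendency to agree with you.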

Step 6: Navigate the "Nothing Does Everything" Problem

After conversations with multiple vendors, you'll face another difficult reality: no tool does everything you want. They all have overlapping capabilities, but none is perfect. You're stuck paying twice for overlapping functionality whilst confusing users with multiple similar tools.

There's no easy answer, but these approaches can help:

Use an Impact/Effort Matrix: Organise use cases by low/high impact versus low/high effort (a close cousin of the Eisenhower Matrix, which sorts by urgency and importance). You can also create a low/high risk version. Pick use cases and tools in the low-effort, high-impact quadrant first. This might sound basic, but you'd be surprised how many organisations skip this step.

Evaluate Foundational Platforms First: Look for technologies or platforms you can deploy organisation-wide that will accelerate and de-risk multiple use cases across departments. If they can help you tackle several high-impact use cases faster than building from scratch, that's a major win.

Once you have some foundational platforms in place, teams with new ideas no longer have to make full-stack technology decisions about which services and software to use at every layer. There is a case for making those decisions from scratch, but building on top of your standard technology platform should be the first choice. Only when that has been disqualified should you turn to a custom build or a specialist software solution.

Now, you could be forgiven for thinking that my current role at Glean - which provides one of these foundational platforms - drives this opinion. Actually, it's the opposite: I bet my career on Glean precisely because I believe that foundational platforms like Glean will be essential to allowing organisations to deliver value at scale, rapidly.

The evidence supports this approach. Companies that establish clearly defined roadmaps and dedicated teams for AI adoption are significantly more likely to see positive bottom-line impact. McKinsey's research shows that organisations following structured adoption practices are more than twice as likely to achieve meaningful results - a finding that aligns perfectly with Kotter's research showing that over 70% of major change efforts using his methodology are successful.
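If it helps, the impact-versus-effort prioritisation described in this step can be sketched in a few lines. The 1 to 5 scoring scale, the threshold of 3 and the example use cases are all assumptions for illustration; use whatever scale your team can agree on quickly.

```python
from dataclasses import dataclass


@dataclass
class ScoredUseCase:
    name: str
    impact: int  # 1 (low) to 5 (high), scored by the team
    effort: int  # 1 (low) to 5 (high), scored by the team


def quadrant(uc: ScoredUseCase, threshold: int = 3) -> str:
    """Label a use case by quadrant; the threshold is an assumption."""
    impact = "high-impact" if uc.impact >= threshold else "low-impact"
    effort = "high-effort" if uc.effort >= threshold else "low-effort"
    return f"{impact}, {effort}"


def prioritise(use_cases: list[ScoredUseCase]) -> list[ScoredUseCase]:
    """Order by impact minus effort, breaking ties in favour of impact."""
    return sorted(
        use_cases,
        key=lambda uc: (-(uc.impact - uc.effort), -uc.impact),
    )


# Hypothetical backlog with made-up scores.
backlog = [
    ScoredUseCase("Rewrite legacy intranet", impact=1, effort=4),
    ScoredUseCase("Custom pricing model", impact=5, effort=5),
    ScoredUseCase("Meeting notes summaries", impact=4, effort=1),
    ScoredUseCase("Auto-tag shared drive", impact=2, effort=2),
]
for uc in prioritise(backlog):
    print(f"{uc.name}: {quadrant(uc)}")
```

A risk score could be added as a third field and folded into the sort key in the same way. The scores themselves matter less than the conversation needed to agree on them.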

Managing the Idea Tsunami

Here's something crucial that many organisations miss: even with a structured approach, you're going to get a torrent of new ideas for AI projects popping up all the time. Once word spreads that you're "doing AI," everyone will have suggestions.

Set up a process for capturing these ideas and assessing them for business value and level of effort. More importantly, establish clear timelines for responding to users who submit ideas and always explain the decisions for how and why they were prioritised.

This might seem like bureaucratic overhead, but it's essential. If people don't see transparency in your decision-making process, they'll assume you're not listening - and that's when they go off building their own shadow AI initiatives, using whatever tools they can find. That's exactly how you end up with data leakage and security risks.
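Here's a minimal sketch of the intake process described above: each idea gets a respond-by date, and it only counts as answered once there is both a decision and an explanation. The 14-day window, the field names and the example idea are assumptions for illustration, not a recommendation.

```python
from dataclasses import dataclass
from datetime import date, timedelta

RESPONSE_WINDOW = timedelta(days=14)  # assumed response window; pick your own


@dataclass
class Idea:
    title: str
    submitted_by: str
    submitted_on: date
    decision: str = ""   # e.g. "accepted", "parked", "declined"
    rationale: str = ""  # the explanation sent back to the submitter

    @property
    def respond_by(self) -> date:
        """Date by which the submitter should have heard back."""
        return self.submitted_on + RESPONSE_WINDOW

    def is_overdue(self, today: date) -> bool:
        """True when the deadline has passed without an explained decision."""
        answered = bool(self.decision and self.rationale)
        return not answered and today > self.respond_by


# Hypothetical submission.
idea = Idea("Draft RFP responses", "sales engineer", date(2025, 1, 6))
print(idea.respond_by)                      # 2025-01-20
print(idea.is_overdue(date(2025, 1, 21)))   # True
```

Requiring a rationale as well as a decision is the design choice that matters here: a bare "declined" is exactly the lack of transparency that pushes people towards shadow AI.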

How you evaluate these technologies is crucial, and different approaches work for different organisations. Some take very structured approaches with detailed technical assessments, whilst others have had success with more subjective methods based on user feedback and pilot results. In a future article, we'll discuss specifically how to evaluate AI technologies and which approach might work best for your situation.

When it comes to building AI agents, you need both code-based and no-code approaches. Reserve code-based solutions for highly specialised use cases requiring significant customisation and investment. A broad, no-code platform can often enable innovation at scale for a large proportion of your use cases.

The technical architecture that supports this approach is crucial - how you structure your data, security, and integration layers will determine whether AI innovation thrives or gets bogged down in compliance concerns. In a future article, we'll go over the enterprise technical architecture that we expect will maximise AI innovation whilst protecting the company from the inherent risks.

Moving Forward

Here's the reality check: you can't afford to sit still and do nothing, and that includes getting paralysed by endless evaluation cycles.

The AI transformation is happening whether you participate or not. The question is whether you'll approach it strategically or reactively.

Companies that follow structured approaches are seeing real results. The key is balancing urgency with thoughtfulness - moving fast enough to stay competitive whilst being methodical enough to avoid the pitfalls that trap so many organisations.

But given how quickly AI capabilities are evolving, don't let perfect planning become the enemy of good action. Find projects at a comfortable level of investment and risk for you and just get started. The learning from real implementation will be more valuable than months of theoretical planning.

If you're in doubt, identify a couple of high-reward, low-effort, low-risk opportunities and have a go. The perfect strategy doesn't exist, but a good strategy executed beats a perfect strategy that never gets started.

The facts on AI might be complicated, but the path forward doesn't have to be.